My brother noticed a strange referrer for this page in his logs today (split over a few lines for convenience):

http://adtools.corp.google.com/cgi-bin/annotatedquery/annotatedquery.py?q=SMSMAIL
&host=www.google.com&hl=it&gl=IT&ip=&customer_id=&decodeallads=1&ie=UTF-8
&oe=utf8&btnG=Go Annotate&sa=D&deb=a&safe=active&btnG=Go Annotate

Maybe it's pretty common, and it's just that, living at the periphery of the Empire, we're not too interesting for Google and never see this kind of referrer in our logs. But I'm curious anyway, and I like the fact that it's obviously a Python script.

The few bits of information that can be gathered from the URL are:

- it's a Python script :) whose name suggests that its purpose is to run a query that lets you add or view annotations for all or some of the results

- it's an ad placement tool for corporate clients (adtools.corp.google.com)

- it has a customer_id field, empty in this particular case

- it has a button labeled "Go Annotate", so it probably lets you add annotations, not (or not only) view them

- it has a decodeallads field, so the query results are probably used to check ad placement

My guess is that it's a tool to help fine-tune ad placement for Google's paying customers, where they can run a query on a set of keywords, see on which result pages their ad would be placed, and annotate the placement for Google's staff.
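Pulling those fields out of the referrer by hand is easy with Python's standard urllib.parse module. A minimal sketch, assuming the spaces in "Go Annotate" were encoded as "+" in the raw log entry (the referrer is reassembled on one line here):

```python
from urllib.parse import urlsplit, parse_qs

# The referrer reassembled on one line; "+" is assumed to be how the
# spaces in "Go Annotate" appeared in the raw log entry.
referrer = (
    "http://adtools.corp.google.com/cgi-bin/annotatedquery/annotatedquery.py"
    "?q=SMSMAIL&host=www.google.com&hl=it&gl=IT&ip=&customer_id="
    "&decodeallads=1&ie=UTF-8&oe=utf8&btnG=Go+Annotate&sa=D&deb=a"
    "&safe=active&btnG=Go+Annotate"
)

parts = urlsplit(referrer)
# keep_blank_values=True keeps empty fields such as ip and customer_id;
# repeated fields (btnG appears twice) come back as lists of values.
params = parse_qs(parts.query, keep_blank_values=True)

print(parts.hostname, parts.path)
for name, values in sorted(params.items()):
    print(name, values)
```

Without keep_blank_values the empty customer_id field would silently disappear from the result, which would hide one of the clues listed above.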

I'm rewriting the mess of scripts I use to manage this site, to make them more stable, give them something like an architecture, and add some important features. Up until tonight I thought I knew how to implement categories and archives; then, while I was walking the dogs (my usual, and only, zen-like moment), I started wondering what should happen to archives when you are inside a category.

I have always thought of categories as just alternative, filtered index and syndication pages for new entries. It's a good feature, one that not all blogs use: it describes entries better, and it lets me offer only my Python category feed to planetpython without cluttering it with unrelated stuff. And I have always thought of archives as, well, archives: a date-based hierarchy ordered by year, month and possibly day, where each date has an index page offering a different level of detail on the relevant entries, e.g. a blog/2004/ page with entry titles, a blog/2004/January page with titles and abstracts, and so on.

But what should happen when you're looking at a category page and click on an archive link? Should you get a filtered archive page displaying only Python entries for that period? Does that make any sense, and would it be useful? Or are archives just "storage", a different sort of categorization unrelated to categories? Is it enough to show each entry's categories in the archive pages (e.g. next to the title in the year view), so that people can filter out entries "visually"?
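In code, at least, a category-filtered archive is just the plain date archive with one extra test. A hypothetical sketch (the Entry class, the sample titles and the year/month grouping are illustrations, not this site's actual scripts):

```python
from collections import defaultdict
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Entry:
    title: str
    published: date
    categories: set = field(default_factory=set)


def build_archive(entries, category=None):
    """Group entries into a {year: {month: [entries]}} hierarchy.

    With category=None this is the plain date-based archive; with a
    category name only matching entries are kept, giving a filtered
    archive for that category.
    """
    archive = defaultdict(lambda: defaultdict(list))
    for entry in sorted(entries, key=lambda e: e.published):
        if category is not None and category not in entry.categories:
            continue
        archive[entry.published.year][entry.published.month].append(entry)
    # Plain dicts, so looking up a missing year or month raises KeyError
    # instead of silently creating an empty archive page.
    return {year: dict(months) for year, months in archive.items()}


# Hypothetical sample entries, for illustration only.
entries = [
    Entry("Annotated queries", date(2004, 1, 12), {"web"}),
    Entry("HTTP status links", date(2004, 1, 20), {"python", "web"}),
    Entry("Perl docs", date(2004, 2, 3), {"python"}),
]
full = build_archive(entries)
python_only = build_archive(entries, "python")
```

So the question is less about code than about how many pages the filtered variants add, which is what the rebuild-time worry is about.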

Tangled in these deep thoughts, I came back home and had a look at a few well-known blogs. Guess what? Everybody does things differently. Some blogs have categories, some have archives, and some have both, but with categories being just index pages that have no effect on archives, etc. Since I will be generating static .html pages, implementing category archives will make a huge difference, not in terms of code but in the time taken to rebuild the site and the load on the storage backend. But then you won't need to rebuild the whole site that often (publishing or modifying an entry means regenerating only the relevant pages), and you might gain something in search engine visibility by cross-linking your site more deeply. Uhm.....
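To put a number on the difference, here is a hypothetical sketch of which index and archive pages publishing a single entry would touch, with and without category archives. The layout extends the blog/2004/January example with a made-up blog/category/<name>/ prefix, and the entry's own page is left out:

```python
from datetime import date

# Hard-coded English month names, so the paths don't depend on the locale.
MONTHS = ("January", "February", "March", "April", "May", "June", "July",
          "August", "September", "October", "November", "December")


def pages_to_rebuild(published, categories, category_archives=True):
    """List the index and archive pages touched by publishing one entry.

    The URL layout is a hypothetical example, not this site's real one.
    """
    month = MONTHS[published.month - 1]
    date_paths = [
        "%d/" % published.year,
        "%d/%s/" % (published.year, month),
        "%d/%s/%02d/" % (published.year, month, published.day),
    ]
    # Main index plus the year, month and day archives.
    pages = ["blog/"] + ["blog/" + p for p in date_paths]
    for category in sorted(categories):
        base = "blog/category/%s/" % category
        pages.append(base)  # the category index/feed page
        if category_archives:
            # One more year/month/day set per category.
            pages.extend(base + p for p in date_paths)
    return pages
```

For an entry filed under two categories that's 6 pages without category archives and 12 with them; a full rebuild grows in the same proportion, which is where the extra time and storage load come from.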

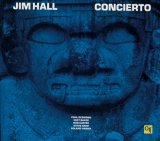

I'm at work trying to decide what to do about the Swiss job (the offer is so-so, and they're rushing things a bit too much for me to feel comfortable), compiling a UML editor for Linux, and listening to "Concierto de Aranjuez" from Jim Hall's Concierto. It's a sad, beautiful and romantic piece composed by the Spanish composer Joaquin Rodrigo Vidre between 1938 and 1939, performed here by Jim Hall with a stunning jazz supergroup made up of Chet Baker, Paul Desmond, Roland Hanna and Ron Carter. It doesn't help my already blue mood, but it's definitely a masterpiece.

Since I can't sleep after being woken up by a loud burglar alarm nearby, I might as well post something new here. Recently I needed to display hits on a web site grouped by HTTP status code, so, to turn a few quick lines of code into something a bit more interesting, I decided to cross-link each status code in the summary to the relevant part of the HTTP 1.1 specification.

The spec looks like a DocBook-generated set of HTML pages (just a guess), with each section and subsection having a unique URL built following a common rule, so once you have a dict mapping status codes to section numbers, building the links is easy:

```python
# Maps each HTTP/1.1 status code to its reason phrase and the number of the
# RFC 2616 section (chapter 10) that defines it.
HTTP_STATUS_CODES = {
    100: ('Continue', '10.1.1'),
    101: ('Switching Protocols', '10.1.2'),
    200: ('OK', '10.2.1'),
    201: ('Created', '10.2.2'),
    202: ('Accepted', '10.2.3'),
    203: ('Non-Authoritative Information', '10.2.4'),
    204: ('No Content', '10.2.5'),
    205: ('Reset Content', '10.2.6'),
    206: ('Partial Content', '10.2.7'),
    300: ('Multiple Choices', '10.3.1'),
    301: ('Moved Permanently', '10.3.2'),
    302: ('Found', '10.3.3'),
    303: ('See Other', '10.3.4'),
    304: ('Not Modified', '10.3.5'),
    305: ('Use Proxy', '10.3.6'),
    306: ('(Unused)', '10.3.7'),
    307: ('Temporary Redirect', '10.3.8'),
    400: ('Bad Request', '10.4.1'),
    401: ('Unauthorized', '10.4.2'),
    402: ('Payment Required', '10.4.3'),
    403: ('Forbidden', '10.4.4'),
    404: ('Not Found', '10.4.5'),
    405: ('Method Not Allowed', '10.4.6'),
    406: ('Not Acceptable', '10.4.7'),
    407: ('Proxy Authentication Required', '10.4.8'),
    408: ('Request Timeout', '10.4.9'),
    409: ('Conflict', '10.4.10'),
    410: ('Gone', '10.4.11'),
    411: ('Length Required', '10.4.12'),
    412: ('Precondition Failed', '10.4.13'),
    413: ('Request Entity Too Large', '10.4.14'),
    414: ('Request-URI Too Long', '10.4.15'),
    415: ('Unsupported Media Type', '10.4.16'),
    416: ('Requested Range Not Satisfiable', '10.4.17'),
    417: ('Expectation Failed', '10.4.18'),
    500: ('Internal Server Error', '10.5.1'),
    501: ('Not Implemented', '10.5.2'),
    502: ('Bad Gateway', '10.5.3'),
    503: ('Service Unavailable', '10.5.4'),
    504: ('Gateway Timeout', '10.5.5'),
    505: ('HTTP Version Not Supported', '10.5.6'),
}


def getHTTPStatusUrl(status_code):
    """Return an HTML link to the spec section for status_code, or None."""
    if status_code not in HTTP_STATUS_CODES:
        return None
    description, section = HTTP_STATUS_CODES[status_code]
    # Section anchors on the W3C page follow the pattern #sec10.x.y
    return ('<a href="http://www.w3.org/Protocols/rfc2616/rfc2616-sec10.html#sec%s">'
            '%s - %s</a>' % (section, status_code, description))
```
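For the summary itself, something along these lines would do. It's a hypothetical sketch: it assumes Apache combined-format log lines, and the regex and helper names are illustrations rather than the code actually used. Passing getHTTPStatusUrl as the link argument gives the cross-linked version.

```python
import re
from collections import Counter

# In an Apache combined log line the status code is the 3-digit field that
# follows the quoted request, e.g.  ... "GET / HTTP/1.1" 200 2326 ...
STATUS_RE = re.compile(r'"[^"]*" (\d{3})\s')


def count_statuses(lines):
    """Count hits per HTTP status code, skipping lines that don't match."""
    counts = Counter()
    for line in lines:
        match = STATUS_RE.search(line)
        if match:
            counts[int(match.group(1))] += 1
    return counts


def status_summary(counts, link=None):
    """Render the counts as an HTML list, most frequent codes first.

    link is any callable mapping a code to a label, such as getHTTPStatusUrl;
    when it returns None (unknown code) the bare number is used instead.
    """
    items = []
    for code, hits in counts.most_common():
        label = (link(code) if link else None) or str(code)
        items.append("<li>%s (%d)</li>" % (label, hits))
    return "<ul>\n%s\n</ul>" % "\n".join(items)


# Hypothetical sample lines in Apache combined format.
sample = [
    '1.2.3.4 - - [10/Oct/2004:13:55:36 +0200] "GET / HTTP/1.1" 200 2326 "-" "Mozilla/4.0"',
    '1.2.3.4 - - [10/Oct/2004:13:55:37 +0200] "GET /nope HTTP/1.1" 404 512 "-" "Mozilla/4.0"',
    '1.2.3.4 - - [10/Oct/2004:13:55:38 +0200] "GET /blog/ HTTP/1.1" 200 8192 "-" "Mozilla/4.0"',
]
print(status_summary(count_statuses(sample)))
```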

To fetch and parse the server log files and store them in MySQL, I'm using a small app I wrote in Python (what else?) with a colleague at work; it started as a niche project and may become a company standard. The app uses separate components both for fetching logs (currently via http:// and file://) and for parsing them (our apps' custom logs, and Apache combined), uses HTTP range requests (block transfers) to fetch only new log records, and uses a simple algorithm to detect log rotations and corruptions. Log records may be manipulated before being handed off to the db, so as to insert meaningful values (e.g. to flag critical errors). The app has a simple command-line interface and uses no threading, as it would have complicated development too much (we use the shell to get the registered logs and run one instance per log); it has no scheduler either, as cron does the job very well. At work it is parsing the logs of a critical app on a desktop PC, handling something like 2 million records in a few hours, and it does it so well that we are looking into moving it to a bigger machine (maybe an IBM Regatta) and DB2 (our company standard), to parse most of our applications' logs and hand off summaries to our network management app. It still needs some work, but we would like to release its code soon. If you're interested in trying it, drop me a note.
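The post doesn't say how the app detects rotations, so the following is only a hypothetical sketch of one common approach for the file:// case: remember the byte offset reached on the last run, read from there, and treat a file that has become smaller than that offset as rotated or truncated. Over http:// the same offset could be sent in a "Range: bytes=<offset>-" request header, which a server supporting ranges answers with 206 Partial Content (or 416 if the offset is past the end of the file).

```python
import os


def read_new_records(path, offset):
    """Return (new_lines, new_offset) for a local log file.

    A file smaller than the saved offset is taken as a sign that the log
    was rotated or truncated, so reading restarts from the beginning.
    """
    size = os.path.getsize(path)
    if size < offset:
        offset = 0  # rotated or truncated: start over
    with open(path, "rb") as log:
        log.seek(offset)
        data = log.read()
    # Only consume complete lines; a trailing partial record is left
    # for the next run, when the rest of it has been written.
    end = data.rfind(b"\n") + 1
    lines = data[:end].decode("utf-8", "replace").splitlines()
    return lines, offset + end
```

Comparing sizes misses a rotation when the new file has already grown past the old offset; saving a checksum of the first few hundred bytes and comparing it on each run would catch that case too.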

I'm on the train to Switzerland for a job interview (no, I'm not unemployed: I have a good job, well paid by Italian standards and pretty comfortable, with a huge, solid company, but I'd like to go work abroad again), reading stuff I dumped onto my newly resurrected iPAQ* this morning before leaving home. Apart from Danah Boyd's "Social Software, Sci-Fi and Mental Illness", which was mentioned in Joel's latest entry, the only other interesting thing to read was Russ's sudden discovery of Perl.

I was a bit surprised to read that a Java developer with some exposure to Python (mainly due to his obsession with Series 60 phones, which sooner or later will get Python as their default scripting language) could suddenly discover Perl and even like it. I was even more surprised when I read that a good part of Russ's sudden love for Perl is due to "Python's horrible documentation.[..] Python's docs were just half-ass and bewildering to me". Horrible? How's that?

I've used my share of languages these past 10 years (and Perl was the first one I loved), and I've never found anything as easy to learn and use as Python. The docs are a bit terse but well written, and they cover the core language features and all the standard library modules with a well-organized layout. And if the standard docs are not enough, you can always search comp.lang.python, read the PEPs on specific topics, read AMK's "What's New in Python x.x" for the current and older versions of Python, keep the Python Quick Reference open, or buy the Python Cookbook (which may give you the right to ask Alex about some obscure Python feature for the 1000th time on clp or iclp and get an extra 20 lines in his replies). As for examples, you don't see many of them in the docs because Python has an interactive interpreter and very good built-in introspection capabilities. So usually, when you have to deal with a new module, you skim the docs, then fire up the interpreter, import the module, and start poking around to see how to use it. And while you're at the interpreter console, remember that dir(something) and help(something) are your friends. Maybe the docs for Perl and its modules are so good (are they, really? I can't remember) because you would be utterly lost in the noise without them, as Russ seems to notice when dealing with special variables, something that still gives me nightmares from time to time.
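Here's the kind of poking around that means, written as a script rather than an interactive session; mailbox is picked only as an example of a module you might not know yet:

```python
import mailbox

# dir() lists every name the module defines; skipping the "_"-prefixed ones
# leaves the public API (mailbox classes such as mbox and Maildir, message
# classes, exceptions...).
public_names = [name for name in dir(mailbox) if not name.startswith("_")]
print(public_names)

# At the interactive prompt, help(mailbox.mbox) pages through the full
# documentation (signature, docstring, methods); the class docstring alone
# is available as __doc__.
print(mailbox.mbox.__doc__)
```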

So I don't really get Russ's sudden love for Perl, nor am I overexcited to learn that he got invited to Foo Camp, as I'm usually not much into the "look how important/well known/an alpha geek I am" stuff (probably because I'm not important, or well known, and definitely not an alpha anything, except to my dogs).

Rereading this entry after posting it, and before I forget about Perl for the next (hopefully many) years, I have to say that if, despite everything, you're really into Perl, you should have a look at Damian Conway's excellent Object Oriented Perl, one of the best programming books I have ever read.

BTW, I live only 50 km away, but I always forget how green Switzerland is. Oh, and I should remember to book a seat in a non-smoking car next time, so as to lower my chances of sitting next to an old lady smoking Gauloises, exploding into bursts of loud coughing every other minute, and traveling with super-extra-heavy suitcases she cannot move half an inch, let alone lift over the seats.

update This morning I found a reply from Russ in my inbox. I'm not an expert in the nuances of written English, but he did not sound happy with my post. I guess the adrenaline rush of going to a long interview in three different languages in a foreign country (not to mention being a bit envious of work conditions overseas) made me come across harsher than I wanted to. Or maybe I just like pissing people off when I have nothing better to do and they trumpet false opinions to the whole world (something I... uhm... never did). Anyway, it turns out Russ could not find the Python Library Reference, which he needed to learn how to use the mailbox module to parse a Unix mailbox. I guess sometimes it just pays to look twice, especially if not looking involves Perl.
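For the record, reading a Unix mailbox with the mailbox module takes only a few lines. A minimal sketch using the current Python 3 API (list_subjects is just an illustrative name):

```python
import mailbox


def list_subjects(path):
    """Return (sender, subject) pairs for every message in a Unix mbox file."""
    # create=False raises mailbox.NoSuchMailboxError if the file is missing,
    # instead of silently creating an empty mailbox.
    box = mailbox.mbox(path, create=False)
    try:
        # Iterating over the mailbox yields one message object per
        # "From " separated message; headers are read like dict keys.
        return [(msg["From"], msg["Subject"]) for msg in box]
    finally:
        box.close()
```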

As usual on my site, comments are disabled: I'm too lazy to add them to my homegrown blogging thing, and I have no time to deal with comment spam. If you feel you have something worthwhile to say, reply to Russ's post on his blog, email me, or just get busy with something else for a few minutes and then try to remember what you wanted to say; maybe this is not such an interesting topic as you thought.

update October 18, 2004 With all the excitement about podcasts these past weeks, which involved starting a new site and doing my own podcasts for Qix.it, I forgot to mention an email from Pete Prodoehl about my comments on US versus European work conditions and salaries in the footnote below. Pete writes:

> Ludo, I certainly don't have the disposable income that many US bloggers seem to have. I know what you mean though. I constantly seem to come across posts where people say they handed down their old 20gig iPod because they got a new 40gig iPod.
>
> Honestly, it all sounds crazy to me... Then again, I live in the US Midwest, maybe things are different here as well. ;)

Thanks Pete, it's nice to learn that not all Americans live in Eden (I should know that myself, having lived there for a year, but it was a long time ago and things might have changed).

* I don't have the money to buy a new PDA (how do you people in the US manage to buy so many gadgets and crap anyway? Don't you have to pay rent/bills/taxes/etc.? Aren't you hit by the recession, or is it something only we Italians have to live with?), so I resurrected my iPAQ 3850, which had been lying around thinking itself a brick for ages, got a new battery off eBay, wiped out that sorry excuse for an operating system that is PocketPC, installed OPIE, Opie Reader and JpluckX, and soon had a working PDA again. I had forgotten how much I missed having one; a smartphone is not the same thing. Now if only I had the money to buy a Zaurus SL-C860...

One of today's Boing Boing posts links to an interesting entry on Nanotech titled "The Kabbalah Nanotech Connection". I've always been fascinated by Jewish mysticism (especially Hasidism), so I went and read Nanotech's blog entry. What intrigued me most, though, was not its main topic but its mention of Temple Grandin, an autistic scientist who published (among other things) a very interesting book titled Thinking in Pictures (you can read Chapter 1 online). I've always known my thought processes were highly visual, but I never knew that visual thinking is one of the distinctive traits of autism. So I googled a bit and came up with a paper by Lesley Sword titled "I Think in Pictures, You Teach in Words: The Gifted Visual Spatial Learner", which defines a few distinctive traits of "gifted visual spatial learners", among them "very sensitive hearing [they] can hear sounds that would simply be background noise for other people". I still remember hearing a soccer player's foot strike the ball 60 or 70 metres away despite the background noise of 80,000 people around me.....